+ Elias Sohnle Moreno

The Danish flexicurity model is an example of how a government is trying to reap the benefits of the gig economy while preventing workers from experiencing the precarious living conditions that are by-products of the changing labor market.

Source: link

+ Martin Bernal Dávila

This implies that central banks and governments will not have complete control over their currencies, because of the blockchain's decentralized nature.

+ Elias Sohnle Moreno

The metaverse is an abstract concept. It can refer to various things depending on the context in which the concept is used. The term metaverse has been attached to so many ideas that it has stopped having a common meaning. It is normal to be confused by the concept.

+ Elias Sohnle Moreno

According to Jody Medich, the current state of graphical user interfaces (GUIs) “limits our ability to visually sort, remember and access information, provides a very narrow field of view on content and data relationships and does not allow for data dimensionality.” Extended reality technology enables the visual representation of information in a three-dimensional space, unlocking users’ ability to fully utilize spatial cognition in the digital world. Humans have evolved to thrive in a three-dimensional environment, and the leap from traditional GUIs to VR has tremendous implications for human interaction with the virtual world.

Source: link

+ Elias Sohnle Moreno

Sometimes companies like Facebook have incentives that conflict with the end-users' interests.

Source: link

+ Kim Tan

One of the most life-changing moments of my whole college experience was when my professor introduced the Design Thinking principle. Design Thinking advocates observation and empathy as the way to derive the most creative, innovative solutions to consumers' needs. Ever since then, I've adopted and applied the human-centered mindset in various aspects of my life as an individual and a business major, completely redefining my outlook on conscious innovation, technology, and business.

One book I highly recommend for this is Creative Confidence by brothers Tom and David Kelley.

+ Stefanie Sewotaroeno

I'm so glad that this gets its own focus, as lately, I have been wondering: what will be illegal in the future (for health reasons)? For example, asbestos was widely used in construction not too long ago, but now it is banned. Cocaine is another example. We have medications in our cabinets that are accompanied by a long sheet with possible side effects, ranging from common to rare. Will one or more of these medications become illegal in the future? If yes, why? I'm very curious about how this will develop in the future.

+ Diede Kok

We sometimes forget how much progress humanity has made in the medical field over the last century. It was only in 1884 that Theodore Roosevelt's wife and mother died on the same day, of post-pregnancy kidney failure and typhoid, respectively, leading the future president to write: 'the light has gone out of my life.' Many once-commonplace ailments are now relegated to the history books, and the despair that Roosevelt felt is increasingly rare, sparing millions of people from losing their light along with a loved one. Source: Leadership In Turbulent Times, by Doris Kearns Goodwin, p.125.

+ Benjamin Von Plehn

Very interesting to see how AI or ML already has an impact on our health.

There is also robot-assisted surgery, which I find interesting. It offers precision and miniaturization, with smaller incisions, decreased blood loss, less pain, and quicker healing time. These systems are mainly used in the US and should soon be found more often in Europe.

Source: link

+ Elias Sohnle Moreno

Beyond the sociological benefits of diversity, genetic diversity protects humans against diseases and favors adaptability. Loss of genetic diversity could present an existential threat to the species in the long run in the event of future pandemics.

+ Elias Sohnle Moreno

Gene editing also raises a very interesting game-theory debate. It is safe to say that European ethical safeguards will restrain the application of gene editing. But what if other nations engage in widespread adoption of gene editing, notably to enhance their populations? Would this prompt Western nations to do the same, at the expense of moral and ethical safeguards? Would nations accept the potential emergence of "genetically superior" humans in the long term? The disparities in ethical and moral standards across nations are very important in the case of gene editing.

+ Elias Sohnle Moreno

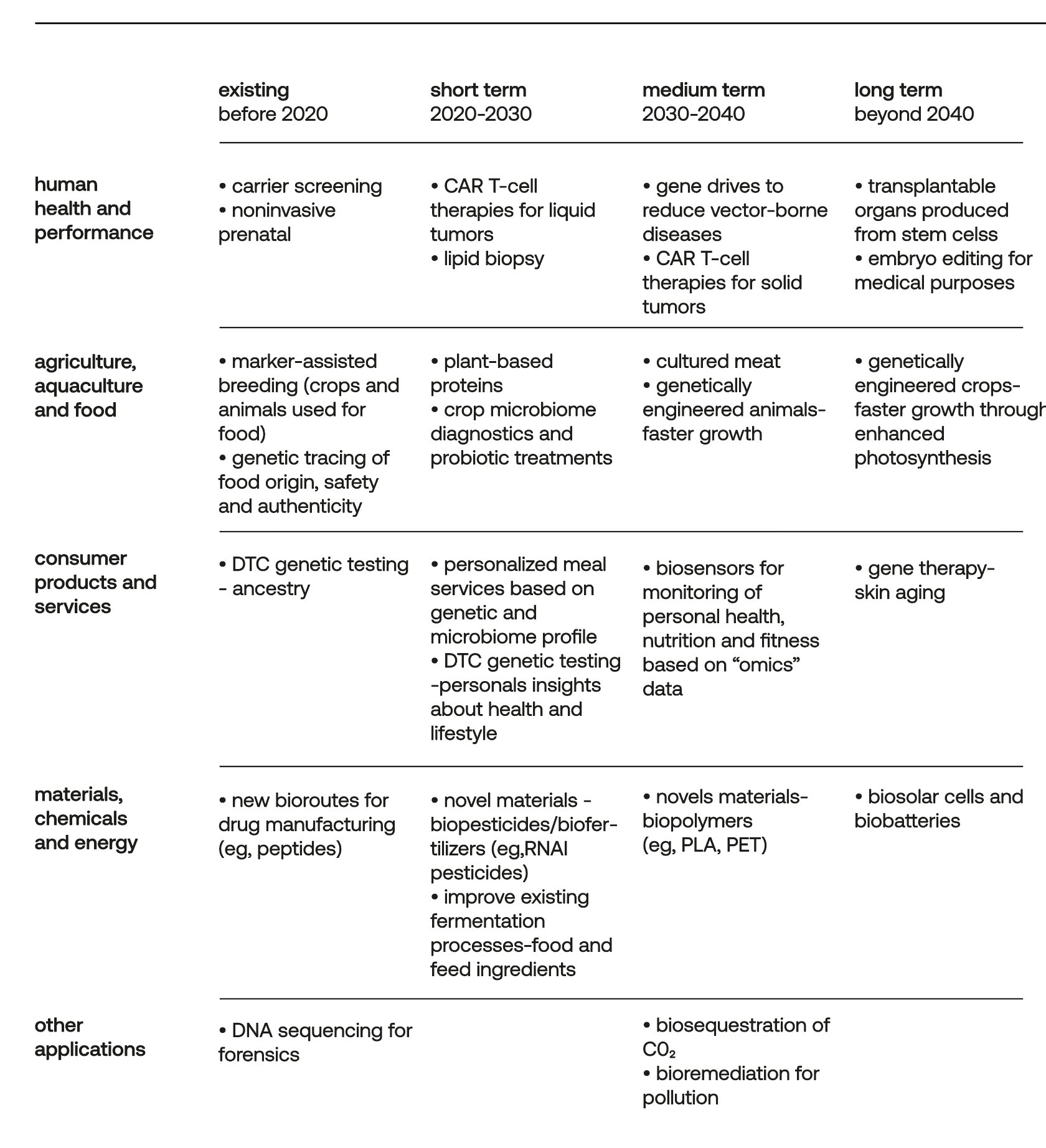

We've been deliberately influencing genes for thousands of years through the selective breeding of crops and livestock.

+ Kim Tan

According to the National Foundation for Transplants, a standard kidney transplant can cost an estimated $300,000 or more, while a 3D bio-printer used to create 3D printed organs costs only $10,000 to $200,000. Furthermore, scientists at Wake Forest are currently testing 3D machines that print skin directly onto the patient's body, a hopeful innovation for burn and trauma victims.

The future of 3D healthcare technology does not just revitalize optimism for individuals waiting for an organ donor; it also reduces the possibilities and dangers of organ trafficking. Could this also bring profound new hope for a safer world?

Read more in these sources: link, link

+ Elias Sohnle Moreno

Some work is already under way, such as Neuralink's work on brain implants and brain-computer interfaces.

Source: link

+ Elias Sohnle Moreno

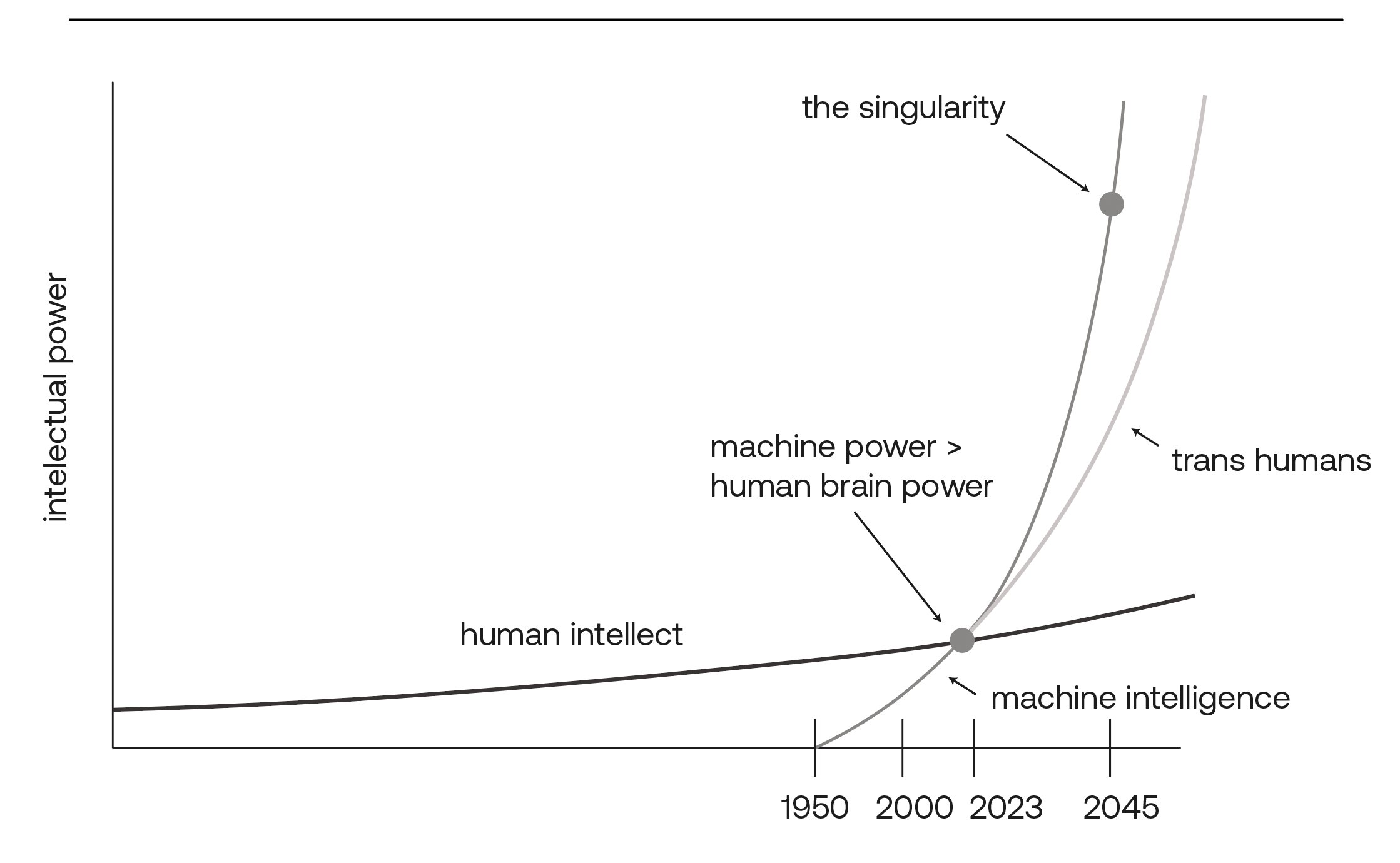

Stephen Hawking expressed his concerns about humanity's ability to handle a technological singularity, seeing extinction as one of many possible scenarios: "Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all." Interestingly, in the same chapter, Hawking notes: "Intelligence is characterized as the ability to adapt to change. Human intelligence is the result of generations of natural selection of those with the ability to adapt to changed circumstances. We must not fear change. We need to make it work to our advantage."

+ Nadine Kanbier

Some interesting recommendations on this topic: the book 'Weapons of Math Destruction' by Cathy O'Neil (well known in the field), the documentary 'Coded Bias' (on Netflix), featuring Joy Buolamwini, and the Algorithmic Justice League, which is worth following (they're doing a ton of work on the subject, combining it with art as well).

+ Camera Ford

Safiya Noble, a digital media scholar and Professor of Gender Studies and African American Studies at the University of California, Los Angeles (UCLA), wrote a book called “Algorithms of Oppression” about exactly this. She studies how the internet and digital technologies reproduce racial and gender power dynamics. The book shows that search engines are not objective sources of information. Instead, search results reflect, and are heavily influenced by, social values and economic incentives (for example, advertising) determined by the dominant power structure, which leads to the spread of stereotypes, propaganda, and even political extremism such as white nationalism.

Source: link

+ Lara Hemels

Noema Magazine is an additional source to look into, specifically its articles on philosophy and technology (particularly AI). The question of what exactly distinguishes humanity from technology will become increasingly relevant as AI advances and integrates further into our lives.

+ Elias Sohnle Moreno

Increasingly, the term "human-centered" is replacing "user-centered" when it comes to product development, highlighting a shift in how companies view the consumer. In Web 3.0, every user is also a creator with unique needs and wants, expecting a highly tailored customer experience.

+ Gianluca Ariello

An interesting consequence of the increasingly frequent use of technological devices in our daily lives is that we are becoming dependent on them for many tasks. Some recent studies have analyzed the effects of this dependence on brain development, focusing especially on the youngest generation, which is feared to rely excessively on mobile devices for accessing information and for social interaction. Surprisingly, no negative correlation has been found so far, leaving as the only worry the possible consequences of losing access, for any reason, to the internet or digital devices.

Source: link